InfiniBand (IB) is a computer network communications standard designed to handle the most demanding workloads and is often used in high-performance computing (HPC).

InfiniBand offers several key advantages over Ethernet, especially in contexts that require high data throughput and low latency.

Let's look at the basics of InfiniBand and explore InfiniBand networking with NVIDIA Quantum.


Exploring the World of InfiniBand Network with NVIDIA Quantum

In high-performance computing (HPC) and enterprise data centers, InfiniBand has become a pivotal interconnect, valued for its performance, scalability, and efficiency. InfiniBand itself is an open industry standard maintained by the InfiniBand Trade Association (IBTA); NVIDIA Quantum is NVIDIA's family of InfiniBand switch platforms. InfiniBand is designed for the most demanding workloads, moving data rapidly between servers, storage systems, and other components of a network. Its high throughput and low latency make it well suited to data-intensive analytics, machine learning, and scientific computation.

The InfiniBand architecture also supports a broad spectrum of advanced features, including Quality of Service (QoS), adaptive routing, and congestion control, which further enhance its efficiency and reliability in complex network environments.

What Is an InfiniBand Network?

Understanding the Basics of InfiniBand

InfiniBand is a high-performance, packet-switched network communications protocol used predominantly in high-performance computing (HPC). It provides significant advantages in both data throughput and latency over traditional interconnects, such as Ethernet, making it particularly well-suited for environments where performance and scalability are critical.

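InfiniBand uses a switched-fabric topology built from point-to-point links. A subnet manager discovers the fabric, assigns addresses, and programs the forwarding tables of every switch, and credit-based flow control on each link prevents packets from being dropped when buffers fill. Traditional Ethernet, by contrast, relies on switches learning addresses from the traffic they see and may drop frames under congestion. InfiniBand's centrally managed, lossless design provides direct and efficient communication paths between endpoints, reducing data transfer times and improving overall network performance.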

Benefits of InfiniBand compared to Ethernet

InfiniBand offers several key benefits over Ethernet, particularly in contexts requiring high data throughput and low latency.

Firstly, InfiniBand provides very high per-port bandwidth: HDR InfiniBand, the generation supported by the original NVIDIA Quantum switches, runs at 200 Gb/s per port, and the NDR generation (NVIDIA Quantum-2) runs at 400 Gb/s. This is critical for applications involving large-scale data processing and transmission.
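To put these per-port rates in perspective, here is a back-of-the-envelope sketch of how long one terabyte takes to cross a single link at each rate. It is an idealized lower bound under stated assumptions: it treats 1 Gb/s as 10^9 bits per second, uses a decimal terabyte, and ignores packet headers, encoding overhead, and latency. The function name `transfer_seconds` is purely illustrative.

```python
def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Idealized time to push size_bytes through one link of link_gbps.

    Uses 1 Gb/s = 10**9 bits/s and ignores headers, encoding overhead,
    and latency, so real transfers take somewhat longer.
    """
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


ONE_TB = 1e12  # decimal terabyte, 10**12 bytes

for generation, gbps in [("HDR", 200), ("NDR", 400)]:
    print(f"{generation} at {gbps} Gb/s: {transfer_seconds(ONE_TB, gbps):.0f} s per TB")
# HDR at 200 Gb/s: 40 s per TB
# NDR at 400 Gb/s: 20 s per TB
```

Note the factor of 8: link rates are quoted in bits per second while dataset sizes are usually given in bytes.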

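Secondly, InfiniBand exhibits lower latency than conventional TCP/IP over Ethernet, because its transport protocol is implemented in the adapter hardware and applications can send and receive data without going through the operating system kernel. Low latency is essential for applications where response time is critical, such as real-time data analytics and transaction processing.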

Additionally, InfiniBand’s native support for Remote Direct Memory Access (RDMA) lets data move directly between the memory of servers and storage devices without involving the remote CPU, further enhancing performance and efficiency.
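The one-sided nature of an RDMA write can be illustrated with a toy model. This is a hypothetical Python sketch, not the libibverbs API: `Host`, `MemoryRegion`, and `rdma_write` are invented names for illustration. Real applications register memory with `ibv_reg_mr` and post work requests to a queue pair with `ibv_post_send`, and the checks shown here are enforced by the adapter hardware. The point of the model is that the target registers a buffer once, after which a peer holding the right key writes into it without any receive code running on the target.

```python
import secrets
from dataclasses import dataclass


@dataclass
class MemoryRegion:
    """Hypothetical stand-in for a registered memory region (not libibverbs)."""
    buffer: bytearray
    rkey: int            # key a remote peer must present to access the region
    remote_write: bool   # access permission granted at registration time


class Host:
    """Hypothetical host whose memory can be registered for remote access."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.regions = {}  # rkey -> MemoryRegion

    def register(self, size: int, remote_write: bool = True) -> MemoryRegion:
        # Registration is a one-time setup step performed by the owning host.
        mr = MemoryRegion(bytearray(size), secrets.randbits(32), remote_write)
        self.regions[mr.rkey] = mr
        return mr


def rdma_write(target: Host, rkey: int, offset: int, data: bytes) -> None:
    """Toy one-sided write: data lands directly in the target's registered buffer.

    No receive handler runs on the target; the permission and bounds checks
    stand in for what the target's adapter would enforce in hardware.
    """
    mr = target.regions.get(rkey)
    if mr is None or not mr.remote_write:
        raise PermissionError("remote access error: invalid rkey or no write access")
    if offset < 0 or offset + len(data) > len(mr.buffer):
        raise PermissionError("remote access error: outside registered region")
    mr.buffer[offset:offset + len(data)] = data


storage = Host("storage-node")
region = storage.register(16)
rdma_write(storage, region.rkey, 0, b"hello")
print(bytes(region.buffer[:5]))  # b'hello'
```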

Key Components of an InfiniBand Network

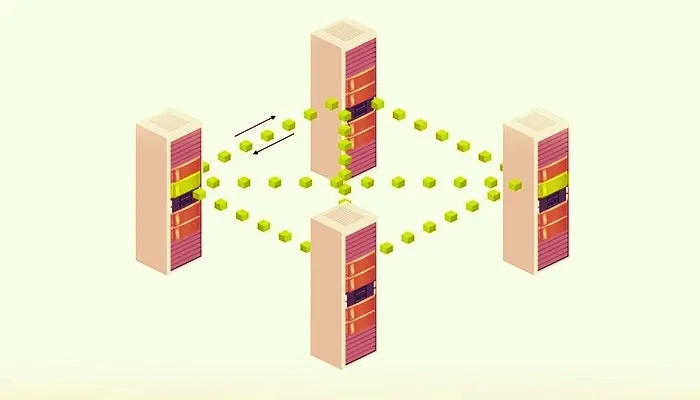

An InfiniBand network comprises several key components, including adapters, switches, and cables, which together form a complete InfiniBand fabric. Host Channel Adapters (HCAs) are installed in servers or storage units, enabling their connection to the InfiniBand network.

InfiniBand switches forward traffic between HCAs along paths computed by the subnet manager, the fabric's central management entity, which assigns addresses and programs each switch's forwarding tables. InfiniBand also requires cables and connectors designed for its high signalling rates. Together, these components give InfiniBand networks the performance and scalability that make them an excellent choice for demanding computational environments.
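In an InfiniBand subnet, the paths switches use are computed centrally by a subnet manager (OpenSM is a common implementation), which gives each endpoint a local identifier (LID) and fills in each switch's forwarding table. The sketch below models that idea on a hypothetical two-switch fabric with a simple shortest-path (min-hop) rule. It is a simplification under stated assumptions: one LID per node rather than per port, single-port HCAs, and ties broken by lowest port number. Production subnet managers offer several more sophisticated routing engines.

```python
from collections import deque

# Hypothetical fabric: two switches, two single-port HCAs on each.
# Each link is (node_a, port_a, node_b, port_b).
LINKS = [
    ("hca1", 1, "sw1", 1),
    ("hca2", 1, "sw1", 2),
    ("hca3", 1, "sw2", 1),
    ("hca4", 1, "sw2", 2),
    ("sw1", 3, "sw2", 3),
]
SWITCHES = {"sw1", "sw2"}


def build_adjacency(links):
    """Map each node to a list of (local_port, neighbor) pairs."""
    adj = {}
    for a, port_a, b, port_b in links:
        adj.setdefault(a, []).append((port_a, b))
        adj.setdefault(b, []).append((port_b, a))
    return adj


def assign_lids(adj):
    """Give every node one unicast LID, starting at 1 (LID 0 is reserved)."""
    return {node: lid for lid, node in enumerate(sorted(adj), start=1)}


def min_hop_tables(adj, lids, switches):
    """For each switch, map destination LID -> output port on a shortest path."""
    tables = {sw: {} for sw in switches}
    for dest, dest_lid in lids.items():
        # Breadth-first search outward from the destination. Only the
        # destination itself and switches are expanded: HCAs do not forward.
        dist = {dest: 0}
        queue = deque([dest])
        while queue:
            node = queue.popleft()
            if node != dest and node not in switches:
                continue
            for _, neighbor in adj[node]:
                if neighbor not in dist:
                    dist[neighbor] = dist[node] + 1
                    queue.append(neighbor)
        for sw in switches:
            if sw == dest or sw not in dist:
                continue  # traffic for the switch itself is handled locally
            ports = [
                port
                for port, neighbor in adj[sw]
                if (neighbor == dest or neighbor in switches)
                and dist.get(neighbor) == dist[sw] - 1
            ]
            tables[sw][dest_lid] = min(ports)  # lowest port number breaks ties
    return tables


adj = build_adjacency(LINKS)
lids = assign_lids(adj)
tables = min_hop_tables(adj, lids, SWITCHES)
for sw in sorted(tables):
    print(sw, dict(sorted(tables[sw].items())))
```

Each switch ends up with a plain lookup table from destination LID to output port, which is what lets switches forward packets quickly without making routing decisions of their own.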

InfiniBand vs Ethernet: A Comparative Analysis

Performance Differences between InfiniBand and Ethernet

When comparing the performance of InfiniBand and Ethernet, the distinctions are pronounced. HDR InfiniBand provides 200 Gb/s per port; Ethernet now offers comparable line rates (400 Gb/s Ethernet is standardized), so raw link speed alone is not the main differentiator. The larger gap is latency. InfiniBand's hardware transport and native support for Remote Direct Memory Access (RDMA) let one computer access the memory of another without involving either one's operating system in the data path. This reduces CPU overhead and significantly improves response times, making InfiniBand a preferable option for time-sensitive operations.
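Why latency matters even on links with the same line rate can be shown with a first-order latency-plus-bandwidth model, where transfer time is latency + size / bandwidth. A useful figure from this model is the "half-bandwidth" message size, the point at which a message achieves half the link rate, which works out to latency × bandwidth. The latency values below are hypothetical placeholders chosen for illustration, not measurements of any product.

```python
def effective_gbps(size_bytes: float, latency_us: float, link_gbps: float) -> float:
    """Throughput actually achieved for one message under a latency + bandwidth model."""
    seconds = latency_us * 1e-6 + size_bytes * 8 / (link_gbps * 1e9)
    return size_bytes * 8 / seconds / 1e9


def half_bandwidth_bytes(latency_us: float, link_gbps: float) -> float:
    """Message size at which effective throughput reaches half the link rate.

    Setting size / (latency + size / B) = B / 2 and solving gives size = latency * B.
    """
    return latency_us * 1e-6 * link_gbps * 1e9 / 8


# Hypothetical end-to-end latencies on the same 200 Gb/s link rate.
for label, latency_us in [("low-latency fabric", 1.0), ("higher-latency fabric", 5.0)]:
    n_half = half_bandwidth_bytes(latency_us, 200)
    print(f"{label}: half of 200 Gb/s reached at {n_half / 1000:.0f} kB messages")
# low-latency fabric: half of 200 Gb/s reached at 25 kB messages
# higher-latency fabric: half of 200 Gb/s reached at 125 kB messages
```

Under this model, a fabric with five times the latency needs messages five times larger before it uses its bandwidth well, which is why latency dominates for the small messages typical of tightly coupled parallel applications.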

Scalability and Flexibility in InfiniBand Networks

InfiniBand networks are built to scale and to adapt to growing, diverse computational demands. A single subnet can address tens of thousands of endpoints, and partitioning and Quality of Service (QoS) features make it possible to build large yet manageable high-performance network infrastructures. These networks can be tailored to the needs of an application, whether high-performance computing (HPC), data center operations, or cloud computing services. The architecture's flexibility also enables efficient resource allocation and reallocation, so dynamic workloads can be supported with minimal manual intervention. This combination of scalability and flexibility lets InfiniBand networks meet current demands and grow with future requirements.
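Two details from the InfiniBand architecture help make subnet size and partitioning concrete. Unicast LIDs occupy the range 0x0001 to 0xBFFF, which bounds how many endpoints one subnet can address. Partitions are identified by 16-bit partition keys (P_Keys): the low 15 bits name the partition and the top bit marks full or limited membership, and two limited members of the same partition cannot talk to each other. The sketch below encodes those rules; it simplifies by giving each port a single P_Key, whereas real ports hold a table of them, and the partition value `0x0010` is a hypothetical example.

```python
# Unicast LIDs occupy 0x0001-0xBFFF; 0x0000 is reserved and higher values
# are used for multicast and the permissive LID.
UNICAST_LIDS = 0xBFFF - 0x0001 + 1


def partition_number(pkey: int) -> int:
    """Low 15 bits of a P_Key identify the partition."""
    return pkey & 0x7FFF


def is_full_member(pkey: int) -> bool:
    """The top bit of a P_Key marks full (1) versus limited (0) membership."""
    return bool(pkey & 0x8000)


def can_communicate(pkey_a: int, pkey_b: int) -> bool:
    """Two ports may talk if they share a partition and are not both limited members."""
    same_partition = partition_number(pkey_a) == partition_number(pkey_b)
    return same_partition and (is_full_member(pkey_a) or is_full_member(pkey_b))


print(f"unicast LIDs per subnet: {UNICAST_LIDS}")  # 49151

# Hypothetical assignment: storage servers are full members of partition 0x0010,
# compute clients are limited members, so clients reach storage but not each other.
storage_pkey, client_pkey = 0x8010, 0x0010
print(can_communicate(client_pkey, storage_pkey))  # True
print(can_communicate(client_pkey, client_pkey))   # False
```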

Advantages and Applications of InfiniBand

High-Performance Computing with InfiniBand

High-Performance Computing (HPC) environments require robust and efficient networking solutions to handle vast volumes of data processing and high-speed data transfers.

InfiniBand, with its high throughput and low latency characteristics, emerges as an indispensable technology in these settings. It enables HPC systems to achieve their full computational potential by minimizing bottlenecks in data communication, thereby accelerating the time to solution for complex computations.
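One way to see how interconnect speed feeds into time to solution is the textbook cost model for a ring allreduce, a collective operation common in MPI codes and distributed training. With p nodes, each reduces an n-byte buffer in 2(p − 1) steps, sending n/p bytes per step, so the time is roughly 2(p − 1)·α + 2(p − 1)·(n/p)/B for per-step latency α and link bandwidth B. The job size and latency below are hypothetical, and the model ignores reduction arithmetic, congestion, and overlap with computation.

```python
def ring_allreduce_seconds(n_bytes: float, nodes: int, step_latency_s: float,
                           link_gbps: float) -> float:
    """Textbook ring allreduce cost: 2(p - 1) steps, each sending n/p bytes per node.

    Ignores the reduction arithmetic, congestion, and overlap with computation.
    """
    steps = 2 * (nodes - 1)
    bytes_per_s = link_gbps * 1e9 / 8
    return steps * step_latency_s + steps * (n_bytes / nodes) / bytes_per_s


# Hypothetical job: 64 nodes reduce a 1 GB buffer; 2 microseconds per step.
for gbps in (200, 400):
    t = ring_allreduce_seconds(1e9, 64, 2e-6, gbps)
    print(f"{gbps} Gb/s: {t * 1000:.1f} ms per allreduce")
```

For large buffers the bandwidth term dominates, so doubling the link rate nearly halves each allreduce; for small buffers the latency term takes over.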

The adoption of InfiniBand in HPC environments supports critical scientific research, including weather modeling, quantum mechanics simulations, and genomics, by providing a scalable and efficient communication framework.

InfiniBand in Data Centers and Clusters

In today’s data-centric world, data centers and clusters demand networking infrastructures that can support the rapid exchange of information between servers and storage systems.

InfiniBand’s architecture, offering high bandwidth and low latency, is particularly suited for data center environments, where performance and scalability are paramount. Its ability to efficiently handle server-to-server and storage networking makes it a preferred choice for cloud service providers, financial services, and large-scale internet companies seeking to optimize their operations and improve service delivery to end-users.

InfiniBand Adoption in the HPC Industry

The adoption of InfiniBand within the HPC industry has been driven by its unmatched performance metrics, reliability, and scalability.

Numerous supercomputing sites globally have integrated InfiniBand to enhance their computational and networking capabilities. This widespread acceptance is a testament to InfiniBand’s ability to meet the rigorous demands of HPC applications, positioning it as a leading networking technology in the arena of advanced computing.

The continuous evolution of InfiniBand standards ensures that it remains at the forefront of networking technologies capable of supporting the next generation of HPC systems.

Exploring InfiniBand HDR Products and Interconnects

The Evolution of InfiniBand HDR Technology

InfiniBand HDR (High Data Rate) is a significant advance in networking technology, pushing the boundaries of speed and efficiency in data transfer. At up to 200 Gb/s per port, HDR doubles the 100 Gb/s of the preceding EDR generation. This evolution shows how InfiniBand has kept pace with the exponential growth of data and the demanding requirements of modern High-Performance Computing (HPC), artificial intelligence (AI), and machine learning workloads.
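The generational doubling follows directly from per-lane rates, since a standard InfiniBand port aggregates four lanes (a "4x" port). The sketch below uses the nominal per-lane data rates for EDR, HDR, and NDR and ignores the slightly higher raw signalling rates. It also shows HDR100, which carries HDR signalling on two lanes. The dictionary and function names are illustrative only.

```python
# Nominal per-lane data rates in Gb/s for recent InfiniBand generations.
PER_LANE_GBPS = {"EDR": 25, "HDR": 50, "NDR": 100}


def port_gbps(generation: str, lanes: int = 4) -> int:
    """Port data rate = per-lane rate x lane count (4x is the common port width)."""
    return PER_LANE_GBPS[generation] * lanes


for gen in PER_LANE_GBPS:
    print(f"{gen}: {port_gbps(gen)} Gb/s per 4x port")
# HDR100 runs HDR signalling on two lanes.
print(f"HDR100: {port_gbps('HDR', lanes=2)} Gb/s")
# EDR: 100 Gb/s per 4x port
# HDR: 200 Gb/s per 4x port
# NDR: 400 Gb/s per 4x port
# HDR100: 100 Gb/s
```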

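HDR is more than a speed increase. With HDR100, a 200 Gb/s port can be split into two 100 Gb/s ports, so a switch such as NVIDIA Quantum can serve either 40 HDR ports or 80 HDR100 ports. This higher port count lets fabrics connect more nodes with fewer switches, improving the scalability and performance of data centers and HPC environments.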
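The effect of switch port count (radix) on scale can be estimated with the standard formula for a full-bisection fat tree built from identical switches: with k-port switches and L levels, the tree supports 2·(k/2)^L hosts. The sketch compares a 40-port switch with an 80-port one, matching the HDR and HDR100 configurations of the original NVIDIA Quantum switch. It assumes one port per host and no oversubscription; real deployments often oversubscribe or use other topologies, so treat the numbers as upper-bound illustrations.

```python
def fat_tree_hosts(radix: int, levels: int) -> int:
    """Hosts in a full-bisection fat tree of identical radix-port switches: 2 * (k/2)**L."""
    if radix % 2 or levels < 1:
        raise ValueError("this sketch assumes an even radix and at least one level")
    return 2 * (radix // 2) ** levels


for label, radix in [("40 x HDR ports", 40), ("80 x HDR100 ports", 80)]:
    print(f"{label}: {fat_tree_hosts(radix, 2)} hosts (2 levels), "
          f"{fat_tree_hosts(radix, 3)} hosts (3 levels)")
# 40 x HDR ports: 800 hosts (2 levels), 16000 hosts (3 levels)
# 80 x HDR100 ports: 3200 hosts (2 levels), 128000 hosts (3 levels)
```

Doubling the radix quadruples the hosts a two-level tree can reach, so a cluster that would need three switch tiers at 40 ports may fit in two at 80 ports, trading per-host bandwidth for fewer hops and switches.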

Benefits of InfiniBand Interconnect Solutions

InfiniBand interconnect solutions stand out for their ability to deliver high levels of performance, scalability, and efficiency in a cost-effective manner. By providing higher data throughput and lower latency, InfiniBand enables organizations to process large volumes of data more quickly, driving advances in research, scientific discovery, and real-time analytics.

Furthermore, InfiniBand's architecture supports advanced features such as congestion control, adaptive routing, and Quality of Service (QoS), which contribute to improved network reliability and management. Its inherent scalability makes it perfectly suited to meet the dynamic needs of expanding data centers and complex computing systems.
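The benefit of adaptive routing can be illustrated with a toy comparison. With static routing, each flow is pinned to one uplink chosen by a fixed rule, so unlucky flows can pile onto the same link; with adaptive routing, the switch picks the least-loaded eligible uplink. This is a simplified, hypothetical model: real InfiniBand switches make adaptive decisions per packet in hardware based on output-queue occupancy, while this sketch decides once per flow, and the modulo rule and flow list are invented for illustration.

```python
def static_routing(flows, n_ports):
    """Each flow is pinned to one uplink chosen from its id, regardless of load."""
    load = [0] * n_ports
    for flow_id, size in flows:
        load[flow_id % n_ports] += size
    return load


def adaptive_routing(flows, n_ports):
    """Each flow goes to whichever uplink currently has the least queued traffic."""
    load = [0] * n_ports
    for _, size in flows:
        port = min(range(n_ports), key=lambda p: load[p])
        load[port] += size
    return load


# Hypothetical traffic: four equal flows whose ids collide under static selection.
flows = [(0, 10), (4, 10), (8, 10), (1, 10)]
print("static:  ", static_routing(flows, 4))    # [30, 10, 0, 0]
print("adaptive:", adaptive_routing(flows, 4))  # [10, 10, 10, 10]
```

The busiest link sets the completion time, so in this example adaptive placement finishes the same traffic with a third of the peak link load.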

Overall, adopting InfiniBand interconnect solutions helps organizations meet their operational and research goals by significantly improving data transfer efficiency and, with it, the effective computational power of their systems.